In recent months, the EU has pushed the AI Act forward. The Act is intended to give European companies a secure framework for using AI in everyday life. But what does this mean for companies that want to use AI as end users?

That’s what this article is about.

What is the AI Act and why was it introduced?

The AI Act is a European Union regulation governing artificial intelligence (AI). It aims to create a common regulatory and legal framework for AI across the EU.

The AI Act was introduced to set clear boundaries for the use of AI, in particular to reduce the risk of AI applications that violate human rights or perpetuate prejudice. This concerns, for example, the scanning of CVs with gender bias, the ubiquitous surveillance of public spaces by AI-controlled cameras or the analysis of medical data that affects health insurance. The AI Act also aims to ensure that fundamental rights, democracy, the rule of law and environmental protection are protected from high-risk AI, while promoting innovation to make Europe a leader in this field.

Companies that use or sell AI systems in a given risk category must implement the measures the AI Act prescribes for that category. The Act takes a risk-based approach: AI systems are assigned to risk categories according to defined criteria, and the obligations scale with the risk. Companies are generally given a two-year transition period after the AI Act enters into force to meet these requirements.

Significance of the AI Act for European companies as manufacturers of AI systems

The AI Act applies both to manufacturers of AI systems and to companies that want to use AI in everyday life. The higher the assessed risk of an AI system, the stricter the safety requirements that apply to it.

Obligations and compliance:

- Companies should build on existing compliance structures and best practices in the field of machine learning to meet the requirements of the regulation

- Early implementation of holistic AI governance can give companies a competitive advantage in terms of time-to-market and the quality of high-risk AI systems

Rights and responsibilities:

- EU citizens have the right to lodge complaints about AI systems and to receive an explanation when a high-risk AI system affects their rights

- Organisations should combine the capability, quality, compliance and scaling of AI systems to successfully deploy AI in the European market, especially against competitors in Asia and the US

Impact on companies as end users of AI

For many of our end customers, who are not providers of AI systems themselves but users, the question arises as to how the AI Act will affect the use of AI in their company.

The specific requirements depend primarily on the risk class of the application. If, for example, a company uses a system that automatically evaluates and pre-sorts CVs in the HR department, it must be transparent which data was used to train the evaluating AI model. End users need to know which biases an AI model may have, for example biases that lead to discrimination.

The specific obligations have not yet been finalised (as of May 2024), but for high-risk AI systems they are expected to include the following:

- The establishment, documentation and maintenance of a risk management system.

- Adherence to data governance and data management procedures for the training, validation and test data sets to be used, including relevant data preparation processes such as annotation, labelling, cleansing, enrichment and aggregation and a prior assessment of the availability, quantity and suitability of the required data sets.

- The maintenance of technical documentation.

- Supervision by human personnel.

- Automatic recording (logging) of processes and events throughout the life cycle of the AI system.

- Transparent information for users, including the characteristics, capabilities and performance limits of the high-risk AI system.

- Cybersecurity.
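
To make the record-keeping obligation in the list above more concrete, here is a minimal Python sketch of what automatic event recording could look like. It is only an illustration under stated assumptions: the function name `record_event`, the field names and the JSON Lines format are our own choices for this example and are not prescribed by the AI Act.

```python
import io
import json
from datetime import datetime, timezone
from typing import Any, TextIO


def record_event(sink: TextIO, system_id: str, event: str, **details: Any) -> dict:
    """Append one timestamped event as a JSON line and return the entry.

    All field names here are hypothetical; the AI Act does not define them.
    """
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "event": event,
        "details": details,
    }
    sink.write(json.dumps(entry, sort_keys=True) + "\n")
    return entry


if __name__ == "__main__":
    # An in-memory buffer stands in for an append-only log file.
    log = io.StringIO()
    record_event(log, "cv-screening-v1", "prediction", human_reviewed=True)
    print(log.getvalue(), end="")
```

In practice the sink would be an append-only file or a central logging service, so that entries cannot be changed after the fact and remain available to the people supervising the system.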

Obligations when using amberSearch

The use of amberSearch remains largely unaffected by these onerous obligations, because amberSearch represents a minimal risk. Employees use amberSearch as an assistance system: it does not make any independent decisions, but merely assists employees in the fulfilment of their tasks. By publishing certain AI models and their training data sets, maintaining documentation and complying with, for example, the GDPR, we fulfil the requirements that apply to enterprise search systems such as amberSearch.

Risk categorisation of various AI solutions for the AI Act

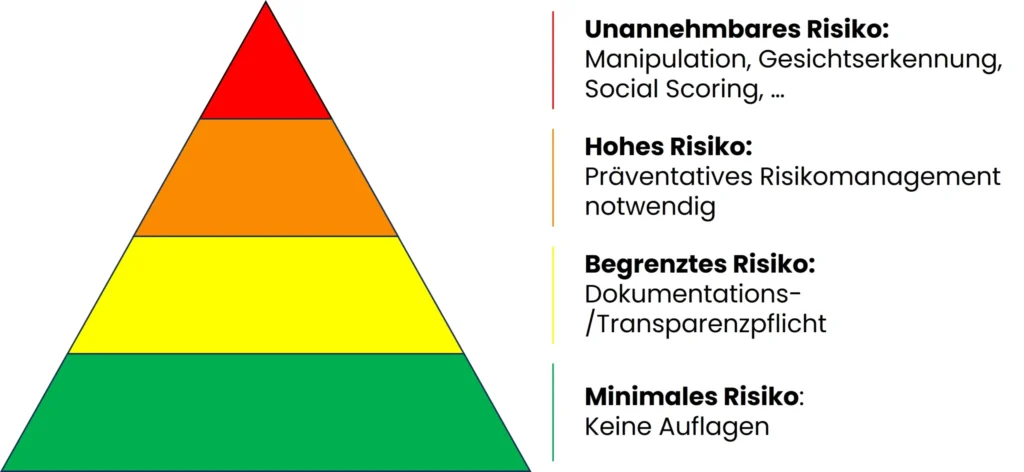

The European Union’s AI Act classifies AI solutions into four risk categories: unacceptable, high, limited and minimal risk. AI systems with unacceptable risk, such as social scoring by governments, are prohibited. High-risk systems, including those used in critical infrastructure, law enforcement or education and employment decisions, are subject to strict requirements and must undergo extensive compliance testing. Limited risk includes systems such as chatbots, which must fulfil clear transparency requirements. Minimal-risk applications, such as simple AI-supported games, are largely unregulated. The classification aims to promote innovation while minimising risks to society. An overview of the risk categories can be found here:

Overview of the risk categories of the AI Act
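
The four-tier scheme can also be written down as a small lookup table. The following Python sketch is only an illustration: the names `RiskTier`, `EXAMPLE_TIERS` and `tier_for` are hypothetical, the entries are limited to the examples mentioned in this article, and the mapping is not a legal classification of any real system.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    """The four risk categories, with the consequence described in this article."""
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements and extensive compliance testing"
    LIMITED = "transparency requirements"
    MINIMAL = "largely unregulated"


# Illustrative examples only, taken from the text above (assumption: simplified labels).
EXAMPLE_TIERS = {
    "social scoring by governments": RiskTier.UNACCEPTABLE,
    "cv pre-sorting in hr": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "simple ai-supported game": RiskTier.MINIMAL,
}


def tier_for(use_case: str) -> Optional[RiskTier]:
    """Return the example tier for a use case, or None if it is not listed."""
    return EXAMPLE_TIERS.get(use_case.strip().lower())


if __name__ == "__main__":
    for name in ("Chatbot", "CV pre-sorting in HR", "weather forecast"):
        tier = tier_for(name)
        print(name, "->", tier.name if tier else "not listed, needs individual assessment")
```

A real assessment depends on the concrete purpose and context of a system, so a table like this can at most serve as a first orientation.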

Opportunities and challenges for companies

The introduction of the AI Act is accompanied by various opportunities and challenges, which we will summarise below:

Opportunities:

- Promotion of innovation and competitiveness through regulatory sandboxes in which companies can test innovative AI solutions under supervision

- Strengthening transparency and trust, as the Act introduces transparency requirements for AI systems, which is particularly helpful for SMEs

- Establishing global standards that help European companies remain internationally competitive

Challenges:

- Extensive administrative requirements such as effective risk management, quality assurance and proactive information, which can be a hurdle for start-ups and SMEs in particular

- Uncertainties due to unspecific wording in the law and possible sanctions

- High costs for the operational and technical implementation of regulatory requirements

- Stricter regulation of generative AI models, which could disproportionately burden European companies and hinder innovation

The final implementation of the AI Act and the reaction of the market will show which of these opportunities and challenges carry the most weight in practice.

Criticism of the AI Act

Even though the AI Act is well-intentioned and promotes the safety of AI applications, it also restricts the ability of European companies to innovate. The effort involved in testing, certification, documentation and similar tasks costs resources and slows down innovation. This can lead to European companies losing ground to American providers.

The Future of the AI Act

The AI Act is still in its infancy, and the actual implementation is yet to follow. Over time, both the AI Act and the accompanying rules will certainly be adapted. For now, it is important that providers and users deal transparently with the technologies and solutions in order to create trust and build knowledge together.