Scattered information is not just a problem inside companies. Customers of T-Systems’ Open Telekom Cloud (OTC) also find information spread across the official website, technical help pages, community notes and other sources. Until now, users first had to know where to look for an answer.

amberSearch’s first freely available, public-facing AI chatbot has been live on the OTC website since July 2024. As an expert in cross-system chat and search for internal use cases, amberSearch has long been a partner of Deutsche Telekom via Techboost and also hosts its software on the OTC as standard. This existing infrastructure has now been used to make the public OTC knowledge centrally accessible, in compliance with the GDPR, of course.

Development of the AI chatbot

The implementation combines a large language model with Retrieval Augmented Generation (RAG). The chatbot, including the connections to the various source systems, was built in just seven weeks.

“As Open Telekom Cloud, we have various points of contact with artificial intelligence,” says Norbert Brüll, Senior Service Manager. “As an AI provider, we are pursuing various AI initiatives with our partners.” These include the provision of development and training environments (e.g. via ModelArts), data pipelines, the GDPR-compliant operation of AI services and an LLM Hub offering.

“With the current project, we have changed our perspective and put ourselves in the shoes of an AI user. We wanted to explore the possibilities of AI for ourselves and our customers,” says Brüll. The use case was quickly found, because a typical enterprise problem also affects the Open Telekom Cloud: a lot of data is scattered across many different places. Users (both internal and external) often have to search for a long time before they find the right information. In the age of AI, the solution is an intelligent search assistant that taps into all sources and condenses the information into a concise answer.

“An enterprise search or intelligent knowledge management reduces search effort in such cases by an average of 40 percent,” explains Bastian Maiworm, co-founder of amberSearch. “The productivity gains are obvious.”

In May 2024, amberSearch and the Open Telekom Cloud launched the project to get the new AI-based chatbot up and running. It built on services that the start-up had already developed and that are now available quickly and easily as a standard product. These include the semantic search “amberSearch” and the question-answering system “amberAI”, which are based on a Large Language Model (LLM) developed and trained in-house. In the basic version, the amberSearch services work like a kind of ChatGPT for a company’s internal knowledge: employees can search it and chat with it.

“However, the internal use case was not what we had in mind for our Open Telekom Cloud chatbot,” says Brüll. “It is intended to help our users quickly find relevant information and put it to use, so that working with the cloud becomes easier, true to the motto ‘Find it fast’.”

Technical implementation of the AI chatbot

To do this, the amberSearch system needed access to the relevant sources. These include, for example, the Open Telekom Cloud website, the document library (Doc Library), community content and a collection of PDFs such as the service description. “To give the chatbot access to this information, we used a technology known as Retrieval Augmented Generation,” explains Maiworm.

“To our knowledge, we are the first public cloud provider to offer its customers a free LLM-based chatbot. The bot creates clear benefits for customer experience and user experience.”

Norbert Brüll, Senior Service Manager, Open Telekom Cloud

When RAG is used, the AI model is not retrained or fine-tuned, which eliminates the corresponding effort and costs. Instead, the chatbot works together with an enterprise search. When a question is asked, a search is first triggered in the connected sources. The passages it finds are passed to the AI model as context for that question and then summarised into an answer. The information thus remains within the company or in the repositories in which it is already stored.
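The retrieve-then-generate flow described here can be illustrated with a minimal Python sketch. It is not amberSearch’s implementation: the bag-of-words `embed` function is a toy stand-in for a real embedding model, `DOCS` contains made-up sample passages, and all function names are hypothetical. The resulting prompt would be sent to an LLM, a step that is left out.

```python
import math
import re
from collections import Counter

# Made-up sample passages (assumption); not real OTC documentation.
DOCS = [
    "Virtual machines can be started from the web console.",
    "Uploaded files are stored in buckets.",
    "The service description lists support hours.",
]

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Step 1: search the connected sources for the k most similar passages."""
    q = embed(question)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(question: str, passages: list[str]) -> str:
    """Step 2: hand the retrieved passages to the model as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

question = "Where are files stored in buckets?"
prompt = build_prompt(question, retrieve(question, DOCS, k=1))
```

Because the model only receives context at question time, changing the underlying documents changes the answers without any retraining.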

amberSearch offers various methods for making data accessible to the bot. In this project, an API is used for the multimedia content of the community, while the other sources are indexed several times a day by a crawler. The information is collected and stored in a standardised form in a vector database. This database serves as the consolidated basis of Open Telekom Cloud knowledge from which the bot draws. Automated updates of the vector database ensure that the bot always works with up-to-date knowledge.
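How a recurring crawl might keep such an index current can be sketched as follows. This is an illustration under assumptions, not the actual pipeline: `KnowledgeIndex` is a hypothetical in-memory stand-in for the vector database, content hashes are assumed as the change-detection mechanism, and pages missing from a crawl are assumed to be deleted.

```python
import hashlib
from dataclasses import dataclass, field

@dataclass
class Entry:
    text: str
    digest: str  # content hash used to detect changes between crawls

@dataclass
class KnowledgeIndex:
    """In-memory stand-in for the vector database (no real embeddings)."""
    entries: dict[str, Entry] = field(default_factory=dict)

    def refresh(self, crawled: dict[str, str]) -> dict[str, int]:
        """Sync the index with one crawl result, keyed by source URL."""
        stats = {"added": 0, "updated": 0, "removed": 0, "unchanged": 0}
        for url, text in crawled.items():
            digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
            old = self.entries.get(url)
            if old is None:
                stats["added"] += 1
            elif old.digest != digest:
                stats["updated"] += 1
            else:
                stats["unchanged"] += 1
                continue  # identical content: nothing to re-index
            # A real pipeline would chunk and re-embed the text here.
            self.entries[url] = Entry(text, digest)
        # Assumption: pages that vanished from the crawl are dropped.
        for url in set(self.entries) - set(crawled):
            del self.entries[url]
            stats["removed"] += 1
        return stats

index = KnowledgeIndex()
first = index.refresh({"/docs/a": "Version 1", "/docs/b": "Intro"})
second = index.refresh({"/docs/a": "Version 2", "/docs/b": "Intro"})
```

Skipping unchanged pages keeps repeated runs cheap, which matters when sources are re-indexed several times a day.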

The chatbot was created in just seven weeks and has been running since mid-July. It gives users of the Open Telekom Cloud easy access to the latest knowledge they need, so tedious searches are a thing of the past. The bot also lists the sources from which it obtained the relevant information. This makes answers verifiable and reduces the risk that hallucinations go unnoticed.
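Source attribution of this kind is possible because every retrieved passage can carry the address it came from. The following sketch shows one way to attach those addresses to an answer. The `Passage` type, the `with_sources` function and the example URLs are hypothetical and do not reflect the bot’s actual output format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Passage:
    text: str
    url: str  # where the passage was retrieved from

def with_sources(answer: str, passages: list[Passage]) -> str:
    """Append a numbered, de-duplicated list of source URLs to an answer."""
    # dict.fromkeys removes duplicates while keeping first-seen order.
    urls = list(dict.fromkeys(p.url for p in passages))
    if not urls:
        return answer
    lines = [f"[{i}] {u}" for i, u in enumerate(urls, start=1)]
    return answer + "\n\nSources:\n" + "\n".join(lines)

passages = [
    Passage("first excerpt", "https://example.com/docs/a"),
    Passage("second excerpt", "https://example.com/community/b"),
    Passage("third excerpt", "https://example.com/docs/a"),
]
reply = with_sources("Generated answer text.", passages)
```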

“With the AI chatbot for the Open Telekom Cloud, we have demonstrated how easy it is to build a value-added AI service for knowledge management. Practically every company can benefit from such a blueprint.”

Bastian Maiworm, Managing Director of amberSearch

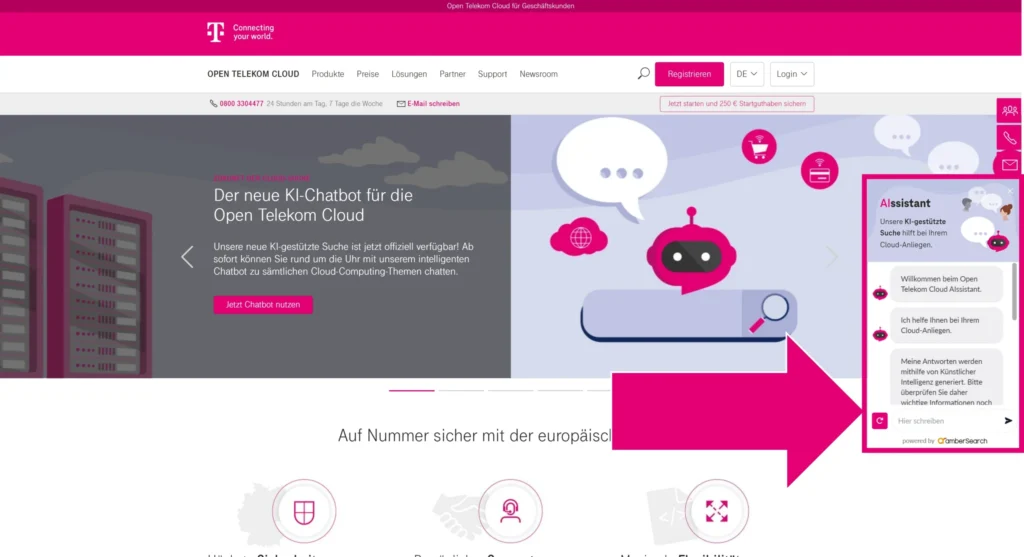

Accessibility of the chatbot

“Our chatbot is easily accessible via the support tab (AI) on the right-hand side of the screen. Registration is not necessary,” says Brüll. “By operating the vector DB and the model within the Open Telekom Cloud, the team has full control over the AI. This also includes GDPR compliance,” adds Maiworm, “so a second stage, in which personal data is used via a login, can also be realised if necessary.”

Screenshot of the chatbot on the Open Telekom Cloud website

So open the bot and ask it what you have always wanted to know about the Open Telekom Cloud.

Incidentally, the bot is not just an information assistant; it can also help you in a very practical way. Norbert Brüll: “If you want to deploy a service, you don’t have to trawl through API documentation or community input. You can simply ask the bot for a container-based deployment setup, including code.”