According to several surveys, data protection and security are among the biggest concerns when using generative AI. Typical questions include:

- Who is liable for AI-generated answers?

- Is the use of AI tools GDPR compliant?

- Are the AI models trained with our data?

- Who owns the copyright for AI-generated content?

Several of our other blog articles have already looked at possible use cases and the technical implementation, without going deeper into the legal side. This post is explicitly about the legal framework for the use of artificial intelligence. First, we will briefly define the GDPR:

What is the GDPR and why is it important when using AI?

The General Data Protection Regulation (GDPR) ensures that the personal data and privacy of individuals are adequately protected. As data protection is a fundamental right, the GDPR aims above all to protect the personal data of people in the European Union. This in turn strengthens the trust of users and consumers.

Although the GDPR is an EU regulation, it has a global reach: it also binds organisations outside the EU that process the personal data of people in the EU, and it is seen as a role model by other countries. Those who implement the GDPR professionally take certain measures to ensure data security and prevent misuse.

Mandatory documentation of data processing also creates transparency, giving data subjects the opportunity to find out how their data is handled.

In addition to the various opportunities offered by generative AI, such as greater efficiency and productivity, time and resource savings and improved knowledge management, there are also risks such as data security, data protection, the risk of misinformation or plagiarism, as well as copyright issues and ethical violations.

To exploit the opportunities of generative AI in the company, the legal and technical design of its use should leverage the added value as efficiently as possible while minimising the risks.

Legal requirements for the use of generative AI

The legal requirements for the use of generative AI relate to data processing, data protection, data security, privacy and liability risks.

Data processing and data protection

- It is in the nature of generative AI that it often deals with large amounts of data. This results in the challenge that it is not possible in practice to explicitly filter out personal data such as email addresses, telephone numbers or similar relevant data such as names. When using generative AI, it must therefore be considered to what extent the transfer of this sensitive data is necessary or can even be avoided.

- If the input or use of personal data cannot be avoided, this should only be done in compliance with the GDPR. Only then can personal data be used in a legally compliant manner.

- As customers, suppliers or employees have a right to the disclosure of data or its processing in case of doubt, companies should handle this transparently and select trustworthy AI solutions. When working together, information about the use of AI should therefore at least be included in the privacy policy.

- The correct legal basis must exist in order to handle these topics in a legally secure manner. This can be prior, appropriate consent on the part of the user or a legitimate interest. As solutions such as amberSearch are introduced by the company, no individual consent needs to be obtained for company-wide solutions. Instead, companies must conclude a Data Processing Agreement (DPA) with the AI provider in which things such as subcontractors and technical and organisational measures (TOMs) are clarified in order to adequately protect the data being processed.
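The first point in the list above, avoiding the transfer of personal data where possible, can be supported technically by redacting obvious identifiers before a prompt leaves the company. The following is a minimal sketch, not a complete solution: the patterns and the `redact` function are illustrative assumptions, and, as noted above, names and other free-text identifiers cannot be caught reliably this way.

```python
import re

# Deliberately simple, illustrative patterns (assumptions, not a standard).
# Real e-mail and phone formats are more varied than this.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s()/-]{6,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


prompt = "Please reply to jane.doe@example.com or call +49 241 1234567."
print(redact(prompt))
```

A filter like this only reduces the amount of personal data that is passed on. It does not replace a legal basis or a DPA.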

Data security and privacy

- The aforementioned TOMs and the list of subcontractors are part of the data protection documentation and thus disclose how the AI provider implements the requirements of the GDPR. The classic topics are things like the frequency of password changes, access restrictions/controls or topics such as privacy by design.

- Companies that want to use AI themselves should carry out a data protection impact assessment (DPIA). This is required if the processing is likely to result in a high risk to the rights and freedoms of natural persons.

Liability risks

One of the biggest points of discussion when using generative AI in companies is the question of liability. For example: Who is liable if incorrect decisions are made on the basis of an AI?

In individual cases, the companies using AI can be held liable. This is the case when it comes to producer, user or product liability or issues such as processing or data protection. Liability issues can arise due to blind trust in the AI output or a lack of consistency.

For example, website chatbots can pose a challenge as they speak on behalf of the company and can, for example, state warranty promises or features out of context.

Similarly, if employees have AI generate the answer to a customer enquiry and do not check it carefully before sending it, both the company and the employee may be liable. To minimise such risks, users should receive extensive training.

To put this in perspective: if companies violate data protection regulations, fines under the GDPR can amount to up to EUR 20 million or 4% of worldwide annual turnover, whichever is higher. This makes it all the more important to implement a good and sustainable AI strategy.

In order to minimise liability risks, companies should therefore act proactively and take appropriate measures, such as the continuous review and auditing of AI systems.
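As a rough illustration of the order of magnitude, the upper limit of the higher GDPR fine tier (Art. 83(5): EUR 20 million or 4% of worldwide annual turnover, whichever is higher) can be computed as follows. The function name is an assumption made for this sketch, and the result is a statutory ceiling, not a prediction of an actual fine.

```python
def gdpr_fine_ceiling_eur(annual_turnover_eur: int) -> int:
    """Upper limit of the higher GDPR fine tier, in whole euros."""
    four_percent = annual_turnover_eur * 4 // 100  # integer maths avoids float rounding
    return max(20_000_000, four_percent)


# An SME with EUR 100 million turnover: 4% would be 4 million, so the fixed ceiling applies.
print(gdpr_fine_ceiling_eur(100_000_000))
# A group with EUR 1 billion turnover: 4% is 40 million, which exceeds the fixed ceiling.
print(gdpr_fine_ceiling_eur(1_000_000_000))
```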

The EU AI Act

The EU AI Act entered into force on 1 August 2024 and lays down rules for the development and use of artificial intelligence. The aim is to ensure the ethically responsible use of AI systems in the EU.

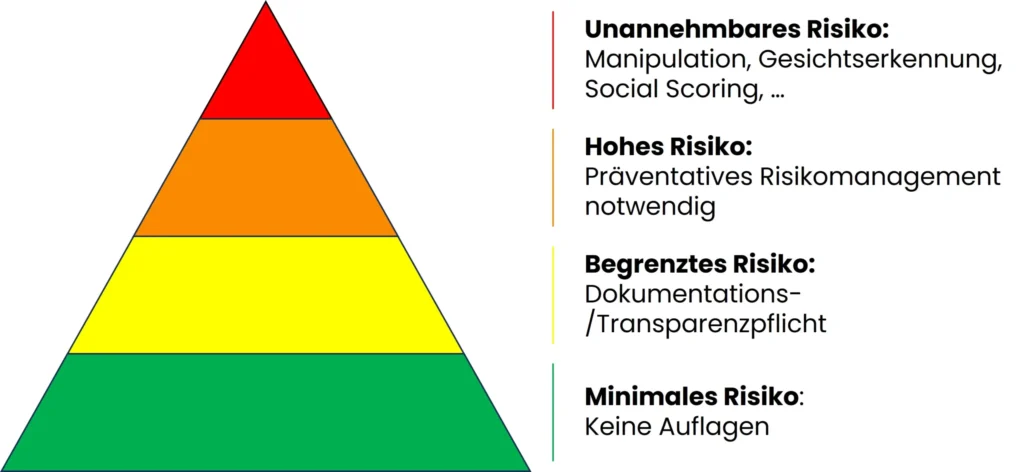

At its core, the AI Act divides AI applications into different risk classes, from which the safety requirements are then derived.

Graphic showing the risk categorisation of the EU AI Act

The risk classes should be categorised according to the scheme shown.

Depending on the classification, the hurdles for introducing AI in the company vary. The AI Act affects both companies that develop AI and those that want to make AI available to their customers. For example, the following things should be taken into account when developing AI systems:

- Systems that classify people, so-called social scoring systems, are prohibited (unacceptable risk).

- AI systems with limited risk are subject to transparency and documentation requirements, so that it is possible to understand how they work and trust in them increases.
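The idea that requirements follow from the risk class can be sketched as a simple lookup. This is a heavily simplified illustration: the four class names follow the AI Act's tiers, but the short descriptions and the `may_be_deployed` helper are assumptions for this example and no substitute for a legal assessment.

```python
from enum import Enum


class RiskClass(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified summary per class (illustrative wording, not legal text).
CONSEQUENCES = {
    RiskClass.UNACCEPTABLE: "prohibited, e.g. social scoring systems",
    RiskClass.HIGH: "strict requirements before and during use",
    RiskClass.LIMITED: "transparency and documentation requirements",
    RiskClass.MINIMAL: "no specific obligations",
}


def may_be_deployed(risk: RiskClass) -> bool:
    """Only systems in the unacceptable class are banned outright."""
    return risk is not RiskClass.UNACCEPTABLE


for risk in RiskClass:
    print(f"{risk.value}: {CONSEQUENCES[risk]} (deployable: {may_be_deployed(risk)})")
```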

In order not to inhibit AI innovation within the EU, the AI Act aims to balance innovation and safety in the development and use of AI. The EU’s recommendations for users are therefore as follows:

- Assessment of the AI application according to risk class

- Compliance with best practices

- Documentation for traceability

- Training and sensitisation

- Monitoring of legislative changes

How can generative AI be made available to employees?

In principle, there are various technical ways in which AI can be made available to employees. These are, for example:

- Provision of a public AI model

- The company's own AI model

- AI solutions integrated into existing solutions

- AI assistants

- AI agents or generative AI search

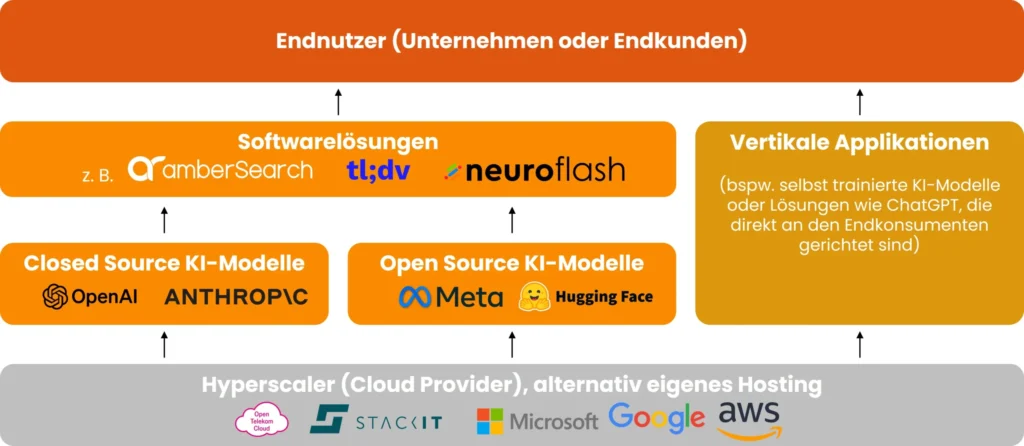

To be able to use these solutions, there are various ways in which they can be set up. Possible set-ups are shown here schematically with examples:

Development of the generative AI landscape

For most companies, however, hosting on their own servers does not make sense: the costs (the hardware alone, without installation, is in the mid five-figure range and higher) make it difficult to build a business case for AI solutions, especially for SMEs. This is why hyperscalers or cloud providers are usually used, either booked by the company as a private cloud or managed by the AI provider as part of classic cloud hosting. This blog article compares different hosting options.

Data storage and processing in the EU

Data that is stored and processed in the EU must be handled in compliance with the GDPR. In essence, the following things should be considered:

Internal guidelines define how personal data is to be handled in AI systems. The lawfulness of data processing must be guaranteed. In particular, this includes the existence of a suitable legal basis, such as consent or a legitimate interest, as well as the corresponding data protection agreements. As it is often difficult to obtain separate consent in practice, the following measures must be taken:

- Conclusion of a data processing agreement (DPA) with the AI provider.

- Mention of the provider used in the privacy policy and documentation in the register of processing activities (subcontractors).

- Complete documentation of the data entered.

- Reviewing and, if necessary, adapting the technical and organisational measures.

- Regular implementation of user training.

- Ensuring the enforcement of data subjects’ rights through suitable processes.
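The measures listed above can be tracked per AI provider as a simple checklist. The sketch below is only one possible way to do this, and the class and field names are assumptions chosen for this example.

```python
from dataclasses import dataclass, fields


@dataclass
class AiProviderCompliance:
    """One flag per measure from the list above (hypothetical field names)."""
    dpa_concluded: bool = False
    named_in_privacy_policy: bool = False
    in_processing_register: bool = False
    data_inputs_documented: bool = False
    toms_reviewed: bool = False
    users_trained: bool = False
    data_subject_rights_process: bool = False


def open_items(status: AiProviderCompliance) -> list[str]:
    """Return the names of all measures that are not yet fulfilled."""
    return [f.name for f in fields(status) if not getattr(status, f.name)]


status = AiProviderCompliance(dpa_concluded=True, toms_reviewed=True)
print(open_items(status))
```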

Data processing and storage in the USA

Under the EU-US Data Privacy Framework, US companies that are certified under this framework are able to host data in a legally secure environment. Nonetheless, the framework rests on an arrangement that can be revoked at any time, in which case investments already made may no longer be usable. Especially in geopolitically uncertain times, we are noticing that more and more companies are opting for sovereign solutions from Germany or the EU. As a result, companies can act in accordance with European values and have greater control over their own data due to various laws.

On-premise solutions/processing on own servers

Many of the challenges mentioned (e.g. DPA, TOMs, etc.) do not apply to on-premise solutions, as the company using them is then itself responsible for hosting the AI software. However, a lot of hardware resources and expertise are required to set up such systems. In addition, the AI solutions must be continuously developed and the hardware requirements can change with larger models.

AI and the use of amberSearch in companies

Much has been written about the legally secure use of AI in companies, which is why we want to position ourselves on this topic with amberSearch. With amberSearch, we have been developing an AI tool since 2020 that makes expertise from existing data silos quickly and easily usable. This is an example of how you could use amberSearch:

For us, it is particularly important to take existing access rights into account, but also to use our solution in compliance with the GDPR. We therefore pursue a trusted partner strategy, i.e. we only host our software where we know that European laws are fundamentally complied with.
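What taking existing access rights into account can mean in practice is sketched below in general terms. This is a generic illustration and not amberSearch's actual implementation: the `Document` class, the group names and the `visible_documents` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    allowed_groups: frozenset[str]  # groups that may read this document


def visible_documents(docs: list[Document], user_groups: set[str]) -> list[Document]:
    """Keep only documents that share at least one group with the user."""
    return [d for d in docs if d.allowed_groups & user_groups]


docs = [
    Document("Salary overview", frozenset({"hr"})),
    Document("Product manual", frozenset({"hr", "sales", "support"})),
]

# A sales employee should only see the manual, not the HR document.
print([d.title for d in visible_documents(docs, {"sales"})])
```

Filtering before any content reaches the AI model means that an answer can only be based on documents the requesting employee is already allowed to read.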

Sounds exciting? Then we look forward to talking to you: