For years, knowledge graphs were the dominant technology for making knowledge accessible in companies. They are used to structure unstructured knowledge and make it retrievable. At the same time, the term “knowledge management” has left a lot of scorched earth in many companies. In recent years, however, large language models (LLMs) have become established. Companies that are considering becoming active in knowledge management (again) are therefore spoilt for choice: do they rely on an established technology or on a modern, evolving one? Without a closer look at both technologies, it is almost impossible to make an assessment.

What are LLMs (if they are used for knowledge management)?

LLM stands for “large language model”: an AI model trained on large amounts of text that can understand and generate human-like language. In knowledge management, LLMs are used to organise, understand, process and generate information.

LLMs as we know them today are based on the transformer architecture, which was only introduced in 2017. Google researchers presented it that year at the NIPS conference in Long Beach, California. We at amberSearch have been working with this technology since 2020 and have already published several models. Since the release of ChatGPT by OpenAI in November 2022, the technology has developed even faster than before and companies are now using it on a broad scale.

In the context of knowledge management, LLMs can solve various use cases, which mostly include the following:

- Semantic search: Through their understanding of natural language, LLMs can enable advanced semantic search. This means that they can not only search for keywords, but also understand the semantic context and deliver relevant results. amberSearch is an example of a solution for this use case.

- Question-answer systems: They can serve as the basis for question-answer systems by understanding natural language questions and generating precise answers. This enables efficient information retrieval and supports employees in quickly obtaining information. amberAI is an example solution for this use case.

- Information extraction and summarisation: LLMs can analyse large amounts of text, extract important information and condense it into concise summaries. This helps to process large amounts of data and filter out relevant findings. In this case too, amberAI is an example of a solution.

- Personalised recommendations: Based on user interactions and preferences, LLMs can make personalised recommendations for content or information by recognising patterns in the data and making appropriate suggestions. Such functions are also included in amberAI.

- Text generation and document creation: LLMs can be used to automatically generate reports, documents or articles by synthesising information from different sources and presenting it in understandable language.

If you want to find out more about the potential introduction of LLMs for knowledge management, you should read this blog post.

Advantages of LLMs in knowledge management:

- Language understanding and interpretation: LLMs can understand and interpret information in natural language, making interaction with knowledge resources more intuitive and user-friendly.

- Up-to-dateness: Combined with techniques such as retrieval augmented generation (RAG) or multi-hop question answering, LLMs can access the latest knowledge at query time without having to be retrained on it first.

- Out-of-the-box systems: When used correctly, large language models work out-of-the-box and do not require individual training or tagging.
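To make the idea of retrieval augmented generation more tangible, here is a minimal sketch in Python. It is not how amberSearch or any particular product works: the documents, the function names and the `generate` callable (a placeholder for whatever LLM is used) are assumptions for illustration, and the simple word-overlap ranking stands in for a real semantic search. The point is only the pattern: fetch relevant passages at query time, then hand them to the model together with the question.

```python
import re
from typing import Callable

# Hypothetical mini "document store"; a real system would query a search
# index over company data instead of a hard-coded list.
DOCUMENTS = [
    "The travel policy was updated in March: train trips are preferred over flights.",
    "Expense reports must be submitted within 30 days of the trip.",
    "The office kitchen is cleaned every Friday afternoon.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase a text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by word overlap with the question.

    This is a deliberately simple stand-in for semantic search.
    Documents without any overlap are dropped.
    """
    question_tokens = tokenize(question)
    scored = [(len(question_tokens & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question: str, context: list[str]) -> str:
    """Combine the retrieved passages and the question into one prompt."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}"
    )


def answer(question: str, documents: list[str], generate: Callable[[str], str]) -> str:
    """Retrieve context first, then let the (placeholder) LLM generate an answer."""
    context = retrieve(question, documents)
    return generate(build_prompt(question, context))
```

Because the passages are looked up at the moment the question is asked, updating a document changes the next answer without any retraining of the model.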

Disadvantages of LLMs in knowledge management:

- Interpretability and transparency: The outputs of LLMs are based on complex models, which makes their decision-making processes less transparent and harder to understand.

- Distortions and misinterpretations: Due to their training data and algorithms, LLMs can generate biased or erroneous information, leading to incorrect interpretations or answers.

- High computing power and resource requirements: The use of LLMs requires significant computing power and resources to function effectively, which may not be available or cost-effective for all organisations, depending on the type of hosting.

What are knowledge graphs?

Knowledge graphs are an innovative way of visualising information and its relationships. Think of them as a giant network map that connects data points (such as people, places, concepts) to visualise their relationships and meanings.

They consist of entities (things or concepts) and their attributes (properties) as well as the relationships between the entities. For example: An entity could be “Eiffel Tower”, with attributes such as “height”, “year built” etc. and relationships to other entities such as “located in Paris”.
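As a rough illustration, the Eiffel Tower example can be written down as a list of (subject, predicate, object) triples in Python. This is a sketch only: the predicate names, the helper functions `query` and `follow`, and the extra facts about Paris are assumptions made for the example, and the attribute values are approximate.

```python
# Each fact is a (subject, predicate, object) triple. Attributes and
# relationships share the same shape; only the object differs (a value
# versus another entity). Values are illustrative.
TRIPLES = [
    ("Eiffel Tower", "is a", "Tower"),
    ("Eiffel Tower", "height in metres", 330),
    ("Eiffel Tower", "year built", 1889),
    ("Eiffel Tower", "located in", "Paris"),
    ("Paris", "is a", "City"),
    ("Paris", "located in", "France"),
]


def query(triples, subject=None, predicate=None, obj=None):
    """Return all triples matching the pattern; None acts as a wildcard."""
    return [
        (s, p, o)
        for s, p, o in triples
        if (subject is None or s == subject)
        and (predicate is None or p == predicate)
        and (obj is None or o == obj)
    ]


def follow(triples, start, predicates):
    """Walk from `start` along a chain of predicates.

    Returns the entity reached at the end, or None if a step has no match.
    If several triples match a step, the first one is used.
    """
    current = start
    for predicate in predicates:
        matches = query(triples, subject=current, predicate=predicate)
        if not matches:
            return None
        current = matches[0][2]
    return current
```

Calling `query(TRIPLES, subject="Eiffel Tower")` lists everything the graph knows about the tower, while `follow(TRIPLES, "Eiffel Tower", ["located in", "located in"])` hops from the tower via Paris to France. Anything that was never entered as a triple cannot be found.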

Knowledge graphs are considered a mature technology, as they are typically based on the Resource Description Framework (RDF). RDF has received several updates over the years; the last significant one (RDF 1.1) dates from 2014, more than 10 years ago.

What are the advantages of knowledge graphs?

- Structure: Provide structured information that is clearly defined and shows relationships between entities.

- Transparency: Provide a transparent model that is easier to understand as it explicitly shows the relationships between data points.

- Representation: Provide clear representations of knowledge that exists in a specific area or domain.

What are the disadvantages of knowledge graphs?

- Creation: Knowledge graphs are time-consuming to create, as the relationships have to be defined up front. The knowledge must be entered into predefined schemas, which makes it a rather static technology.

- Less flexibility: Knowledge graphs may not be as flexible as language models and may have difficulties with unforeseen queries. In addition, they are relatively difficult to adapt to different applications, as the “knowledge” then has to be redefined or modelled differently.

- Rigidity: Knowledge graphs are limited to the knowledge that has been entered into them and have difficulty integrating new or evolving information.

Technology decision: Comparison of LLMs vs. knowledge graphs in knowledge management

Now that the advantages and disadvantages of LLMs and knowledge graphs are clear, the two technologies can be compared for the knowledge management use case.

Of course, there are significantly more success stories for knowledge graphs than for LLMs. This is not because LLMs are weaker, but because they are so new that many companies have not yet integrated them in depth. A frequent criticism of LLMs is that they do not understand the context. But this is precisely where their flexibility comes into its own: thanks to techniques such as multi-hop question answering and retrieval augmented generation, LLMs can decide for themselves how much additional context they need in order to answer a question correctly (keyword: autonomous agents). And if employees are not satisfied with an answer, they can simply ask follow-up questions and have the relevant information generated. It is also a common fallacy that LLMs first have to be trained with internal data. At amberSearch, we offer solutions that work out-of-the-box and without training.
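The idea that a model decides for itself whether it needs more context can be sketched as a simple loop. The following Python snippet is an assumption-laden illustration, not a description of a specific product: `search` stands for any retrieval function and `decide` stands for an LLM call that either returns an answer or asks for another lookup. Both names and their signatures are hypothetical.

```python
from typing import Callable, Optional


def multi_hop_answer(
    question: str,
    search: Callable[[str], list[str]],
    decide: Callable[[str, list[str]], tuple[Optional[str], Optional[str]]],
    max_hops: int = 3,
) -> Optional[str]:
    """Collect context hop by hop until `decide` is able to answer.

    `decide` is a placeholder for an LLM call. It receives the original
    question plus all context gathered so far and returns a pair
    (answer, follow_up_query): an answer if the context suffices,
    otherwise the next query to run. Returns None if no answer is found.
    """
    context: list[str] = []
    query = question
    for _ in range(max_hops):
        # Fetch more passages for the current query and add them to the context.
        context.extend(search(query))
        answer, follow_up = decide(question, context)
        if answer is not None:
            return answer
        if follow_up is None:
            # The model neither answered nor asked for more: give up.
            break
        query = follow_up
    return None
```

The `max_hops` limit is a design choice in this sketch: it keeps the loop from running indefinitely when the required information simply does not exist in the company data.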

Knowledge graphs also have their undeniable advantages: You know how they work and you can be sure that the answer will be correct – provided that the information defined in the graph is up-to-date and correct.

To determine the right technology, however, the right use case must first be defined. We have uploaded an example here to help interested parties define their use cases.

Development prospects and trends in the future

From our point of view, one thing is clear: if you want to work in a future-orientated way, you should rely on LLMs. The (development) potential of this technology is too great, especially if you look at the last few years. What many CTOs considered technically unfeasible a few years ago is now realisable. LLMs are becoming more and more efficient, which means that the high resource requirements can be reduced in the medium term. It is not without reason that more and more providers are focussing on LLMs and reducing the use of knowledge graphs.

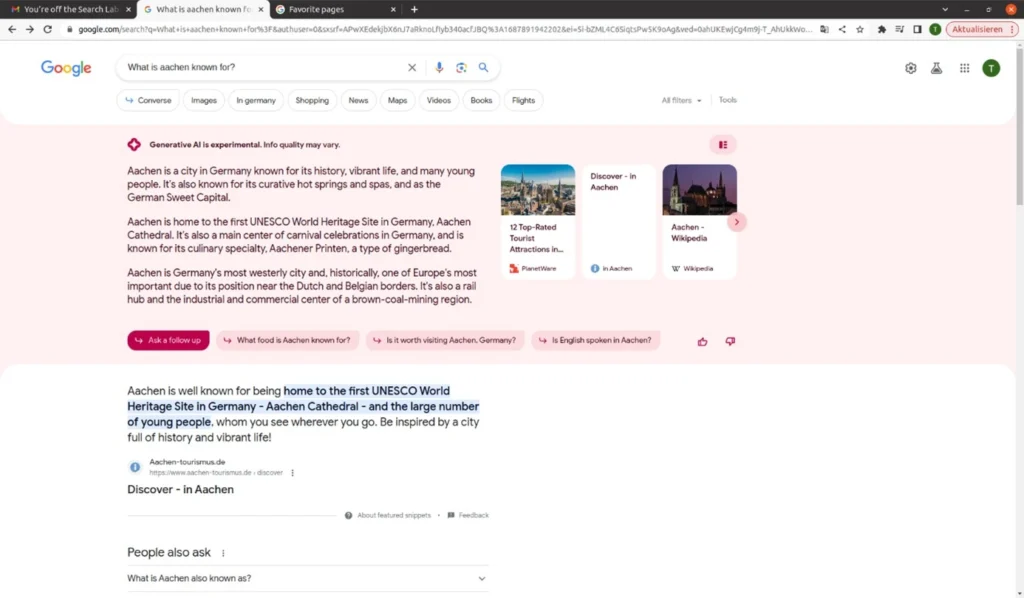

Google also uses knowledge graphs. We all know them as the information panel displayed on the right-hand side next to the search results. However, Google is also experimenting with generative AI in its answers, with the aim of producing much better answers than the current ones.

At the end of the day, you have to look at both technologies in such depth that you can realistically assess the potential added value and resource requirements and make a good decision based on the right use cases.