Companies have now realised that AI will have a lasting impact on business life. However, because the technology arrived so quickly, many companies still lack the expertise and understanding needed to set realistic expectations. This blog article provides an insight into how generative AI is commonly used in companies.


Before getting more technical, it’s important to understand the following: software is only ever a means to an end and must solve a business problem. AI should not be used just because it is trendy, but because it delivers clear added value for a problem that cannot be solved better by other technical means. If there are other, technically equivalent solutions, these are also perfectly legitimate. To assess the technology for your own use case, you should have a rough understanding of how generative AI works. From a technical point of view, the AI system presented here is the best solution for most use cases, but this does not mean that other solutions cannot be better for certain use cases.

How to combine generative AI with internal company data

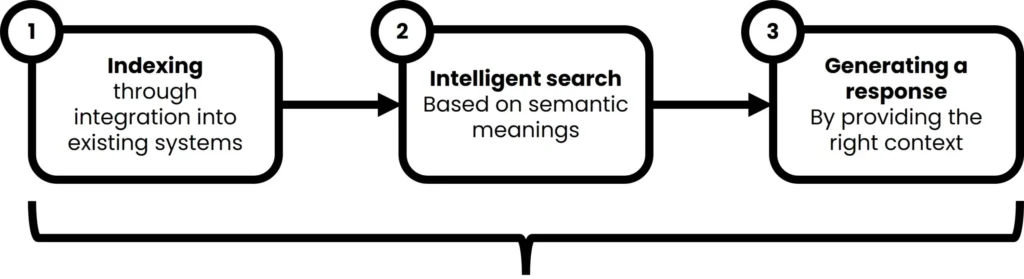

Most companies are now aware of the potential added value and use cases of generative AI. Nevertheless, you cannot simply train an AI model on your own data: access rights would be lost, information added after training would not be taken into account, and training would require considerable hardware resources and technical expertise (for more information, see blog post). In addition, an in-house AI model would still be prone to hallucination, which is a no-go in a corporate context. This is why companies now rely on Retrieval Augmented Generation (RAG), which uses several AI models at different stages. The process looks like this:

High Level procedure of a Retrieval Augmented Generation System (incl. Multi-Hop Q&A)

In order to combine generative AI with internal company data, an index (1) must first be created in which the company’s content is embedded. An intelligent search (2) is built on this and an answer can then be generated from these results (3). The following paragraphs will go into the technology and background in more detail.
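To make step (1) more concrete, here is a minimal Python sketch of building such an index. It assumes a hypothetical `embed()` function that stands in for a real embedding model (here just a bag-of-words count), and it stores each document’s access groups next to every passage so that access rights survive indexing. The names `chunk`, `Entry` and `build_index`, as well as the sample documents, are illustrative only.

```python
import re
from collections import Counter
from dataclasses import dataclass


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a sparse bag-of-words vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split a document into passages of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


@dataclass
class Entry:
    doc_id: str
    passage: str
    vector: Counter
    allowed_groups: frozenset  # access rights copied from the source system


def build_index(documents: dict[str, str], acl: dict[str, list[str]],
                max_words: int = 50) -> list[Entry]:
    """Step (1): chunk every document and store each passage with its vector and ACL."""
    index = []
    for doc_id, text in documents.items():
        for passage in chunk(text, max_words):
            index.append(Entry(doc_id, passage, embed(passage), frozenset(acl[doc_id])))
    return index


if __name__ == "__main__":
    # Invented sample documents and permissions, for illustration only.
    docs = {
        "handbook": "Holiday requests go through HR. " * 30,
        "pricing": "Our enterprise plan is billed yearly.",
    }
    acl = {"handbook": ["all-staff"], "pricing": ["sales"]}
    index = build_index(docs, acl)
    print(len(index), "passages indexed")
```

In a real system, `embed()` would call a dense embedding model and the entries would be written to a vector database; the point here is only that passages, vectors and permissions are stored together.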

How generative AI models work

Generative AI models are trained on a large data set (largely taken from the Internet), which allows them to build up knowledge on almost every topic. Put simply, this knowledge can be pictured as being organised in an n-dimensional space in which content is represented as so-called embeddings (vectors). In this space, content is “clustered” primarily by semantic similarity: phrases such as “How much money has”, “How much wealth has” and “How rich is” mean essentially the same thing and therefore lie very close to each other. When a user enters a prompt, what matters is therefore less the exact wording than its semantic meaning. Mathematical operations, such as measuring the distance between vectors, can then be used to find related information. The answer itself is generated word by word (more precisely, token by token), with each next word chosen according to a calculated probability.
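The two mathematical ideas in this paragraph can be illustrated with a small Python sketch: cosine similarity, a common way of measuring how close two embeddings are, and a softmax that turns raw model scores into next-word probabilities. The three-dimensional vectors and the scores below are made-up toy values, not output from a real model; real embeddings have hundreds or thousands of dimensions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw scores for candidate next words into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the maximum for numerical stability
    exps = {word: math.exp(s - top) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


# Made-up toy embeddings, for illustration only.
vectors = {
    "How much money has": [0.90, 0.10, 0.05],
    "How much wealth has": [0.85, 0.15, 0.05],
    "How rich is": [0.80, 0.20, 0.10],
    "What is the weather": [0.05, 0.10, 0.95],
}

if __name__ == "__main__":
    query = vectors["How rich is"]
    for phrase, vec in vectors.items():
        print(f"{phrase!r}: {cosine(query, vec):.2f}")

    # Made-up scores for the word following "The capital of France is".
    probs = softmax({"Paris": 5.0, "Lyon": 2.0, "beautiful": 1.5})
    print(max(probs, key=probs.get), probs)
```

Always picking the most probable word is known as greedy decoding; many systems instead sample from the probability distribution.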

To an individual person, generative AI models seem highly intelligent because they have absorbed far more knowledge than any single human. Nevertheless, they only reproduce what they have learnt. One challenge of generative AI models is hallucination: faced with such large amounts of data, a model can combine pieces of information incorrectly and present the result as fact.

Retrieval augmented generation to avoid hallucinations

Retrieval Augmented Generation has the advantage that no company-specific AI model needs to be trained, as the system works primarily with context supplied at query time. The basis for a RAG system is the intelligent search. It first delivers results that are relevant and accessible to the user (i.e. taking access rights into account), which are then evaluated and ranked by an AI model based on their semantic content. A generative AI model builds on these results. It receives a prompt that, simply put, looks like this: “The user’s question is ‘XXX?’ and the relevant results are ‘Result 1’, ‘Result 2’, ‘…’. Answer the user’s question based on this context.” The general language ability of the generative model is thus used to reformulate the results passed in as context, instead of answering the question from the model’s own trained knowledge. This largely avoids the hallucination problem. In addition, because the context is determined by the upstream search, current documents and access rights, for example, are taken into account automatically.
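The permission check and prompt assembly described above can be sketched in Python as follows. This is a minimal illustration under several assumptions: the `Result` type and the group-based permission check are hypothetical, the relevance scores are assumed to come from the upstream search, the sample results are invented, and the call to the generative model itself is left out because it depends on the provider. The prompt text follows the template quoted above.

```python
from dataclasses import dataclass


@dataclass
class Result:
    text: str
    score: float               # relevance score from the upstream search/ranking
    allowed_groups: frozenset  # who may see the source document


def visible_results(results: list[Result], user_groups: set[str]) -> list[Result]:
    """Drop results the user may not access, then order by relevance (best first)."""
    allowed = [r for r in results if r.allowed_groups & user_groups]
    return sorted(allowed, key=lambda r: r.score, reverse=True)


def build_prompt(question: str, results: list[Result], top_k: int = 3) -> str:
    """Assemble the RAG prompt: the question plus the top_k results as context."""
    context = ", ".join(f"'{r.text}'" for r in results[:top_k])
    return (f"The user's question is '{question}' and the relevant results are {context}. "
            "Answer the user's question based on this context.")


if __name__ == "__main__":
    # Invented search hits, for illustration only.
    hits = [
        Result("Travel costs are reimbursed within 30 days.", 0.82, frozenset({"all-staff"})),
        Result("Board minutes: planned restructuring.", 0.91, frozenset({"board"})),
        Result("Submit receipts via the expense portal.", 0.77, frozenset({"all-staff"})),
    ]
    ctx = visible_results(hits, {"all-staff"})
    print(build_prompt("How do I get my travel costs reimbursed?", ctx))
```

Filtering before the prompt is built means that content the user is not allowed to see never reaches the generative model in the first place.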

An intelligent search is ALWAYS the basis

Intelligent search plays a fundamental role in a RAG system. It makes sense to use a modern enterprise search or a generative AI system that includes such a function (e.g. amberAI). If the search delivers poor results, the generated answer will also be poor (as the saying goes: shit in, shit out). At amberSearch, we therefore started training and publishing intelligent reranking models (based on LLMs) in 2020. One of our most successful models has now reached a mid-six-figure number of downloads and has been rated by several independent scientific papers as one of the most efficient of its kind.
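The general retrieve-then-rerank pattern can be sketched in Python. A cheap first stage shortlists candidates; a second, more precise scorer then re-orders only that shortlist. In practice the second stage would be a trained reranking model that reads query and passage together; here a hypothetical bigram-overlap score stands in for it purely so the example runs without a model. This is not amberSearch’s implementation.

```python
import re
from typing import Callable


def tokens(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())


def retrieve(query: str, passages: list[str], n: int = 10) -> list[str]:
    """Stage 1: cheap, recall-oriented retrieval by number of shared words."""
    q = set(tokens(query))
    scored = [(len(q & set(tokens(p))), p) for p in passages]
    scored = [sp for sp in scored if sp[0] > 0]
    scored.sort(key=lambda sp: sp[0], reverse=True)
    return [p for _, p in scored[:n]]


def bigram_overlap(query: str, passage: str) -> float:
    """Hypothetical stand-in for a reranking model: scores query and passage jointly."""
    def bigrams(text: str) -> set:
        w = tokens(text)
        return set(zip(w, w[1:]))
    return len(bigrams(query) & bigrams(passage))


def rerank(query: str, candidates: list[str],
           score_fn: Callable[[str, str], float], k: int = 3) -> list[str]:
    """Stage 2: precise but expensive scoring, applied only to the shortlist."""
    return sorted(candidates, key=lambda p: score_fn(query, p), reverse=True)[:k]


if __name__ == "__main__":
    # Invented passages, for illustration only.
    passages = [
        "expense reports are due monthly, travel is booked via the portal, the limit is set by finance",
        "the travel expense limit is 150 euros per night",
        "canteen menu for this week",
    ]
    query = "travel expense limit"
    shortlist = retrieve(query, passages)
    print(rerank(query, shortlist, bigram_overlap))
```

Both passages share all three query words, so the first stage cannot tell them apart; the second stage, which looks at the query and passage together, moves the passage that actually answers the question to the top.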

Multi-hop question answering as the next expansion stage

The next expansion stage of Retrieval Augmented Generation is multi-hop question answering. In a standard RAG system, a single search query is formulated, so the retrieved results focus primarily on one topic. However, if you try tasks such as “Write me a cold-call email to our buyer persona CTO and name our USPs”, a standard RAG system quickly reaches its limits, because answering this requires information from several sources. Such a prompt is therefore broken down as follows:

- Cold-call email template

- Buyer persona CTO

- Our USPs

A multi-hop system first finds an answer for each of these sub-tasks and then combines them into a final answer. This produces considerably more advanced, higher-quality answers than a search focused on just one of these topics. If you want to use a future-proof generative AI tool in your company, you should make sure that such functions are built into the software.
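A multi-hop flow of this kind can be sketched in Python. The sketch assumes that the decomposition into sub-questions has already happened (in practice a generative model usually does this) and uses a hypothetical `answer_fn` in place of a full RAG call per hop; the lookup table and its placeholder texts are invented for illustration.

```python
from typing import Callable


def multi_hop(task: str, sub_questions: list[str],
              answer_fn: Callable[[str], str]) -> str:
    """Answer each sub-question separately, then build one prompt for the final answer."""
    # One hop per sub-question; in a real system each hop is its own search + generation.
    findings = [(q, answer_fn(q)) for q in sub_questions]
    notes = "\n".join(f"- {q}: {a}" for q, a in findings)
    return (f"Task: {task}\n"
            f"Intermediate findings:\n{notes}\n"
            "Complete the task using only these findings.")


if __name__ == "__main__":
    # Placeholder knowledge, standing in for search results from internal systems.
    kb = {
        "Cold-call email template": "<template from the sales wiki>",
        "Buyer persona CTO": "<persona description from marketing>",
        "Our USPs": "<USP list from the product team>",
    }
    prompt = multi_hop(
        "Write me a cold-call email to our buyer persona CTO and name our USPs",
        list(kb),
        lambda q: kb.get(q, "no result found"),
    )
    print(prompt)
```

The final prompt is then passed to the generative model, which now has context for all three sub-tasks instead of just one.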

AI agents

Whereas a multi-hop question answering system decides in a single step what the individual steps should look like, AI agents can check their results independently and execute several steps in succession. Autonomous AI agents in particular are significantly more complex to develop, which is why they are currently only used for relatively simple or very common use cases. We have written a detailed blog article about AI agents here.
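The difference from multi-hop question answering can be sketched as a loop in Python: instead of fixing all steps up front, the agent repeatedly decides on and executes a next step, checks the result itself, and stops when the check passes or a step limit is reached. `act` and `check` are hypothetical callbacks; in a real agent both would typically be calls to a generative model or to tools.

```python
from typing import Callable, Optional


def run_agent(goal: str,
              act: Callable[[str, list[str]], str],
              check: Callable[[str, str], bool],
              max_steps: int = 5) -> tuple[Optional[str], list[str]]:
    """Act, self-check, repeat; returns (accepted result or None, all intermediate results)."""
    history: list[str] = []
    for _ in range(max_steps):
        result = act(goal, history)  # choose and execute the next step, given what happened so far
        history.append(result)
        if check(goal, result):      # the agent verifies its own output
            return result, history
    return None, history             # step budget exhausted without an accepted result


if __name__ == "__main__":
    # Toy callbacks: each step produces a new draft; the check accepts the third one.
    result, steps = run_agent(
        "draft an email",
        act=lambda goal, hist: f"draft {len(hist) + 1}",
        check=lambda goal, res: res == "draft 3",
    )
    print(result, steps)
```

The `max_steps` budget is a simple safeguard: without it, a self-checking loop whose check never passes would run indefinitely.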

Conclusion

The differences between such systems lie in the details, so it is important to understand the technical differences (and the resulting limitations) between providers. In our blog post Introducing generative AI, we looked at the various requirements that companies (should) have if they want to use generative AI themselves.