Everyone wants to use generative AI in their company, but only a few have a feel for the necessary value chain to be able to realistically estimate the effort and costs. This article is less about the actual introduction of generative AI – we have already written various articles on this. It is much more about the technical infrastructure in the background – the Generative AI stack.

Definition of the generative AI stack

A generative AI stack comprises all the resources and elements required to offer AI solutions to employees and companies.

A number of resources are required to use generative AI in the company in a meaningful way. The main resources are as follows:

- Hardware resources for training and operating the AI models

- The AI models themselves

- AI applications that make the AI usable

This list could of course be extended to include chips (which are part of the hardware resources), the training data required to train the AI models, and so on. However, this article is intended to help companies that use AI but lack in-depth technical knowledge to develop an understanding of the necessary infrastructure.

Hardware resources

Software needs hardware, in the form of servers, to run. Since NVIDIA’s share price went through the roof, it has become common knowledge that this applies in particular to large language models (LLMs).

On the one hand, hardware resources are needed for the initial training of AI models. Although the exact costs are not publicly known, training one of today’s leading LLMs is estimated to cost more than 100 million US dollars.
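To get a feel for where such figures come from, the compute part of a training budget can be estimated with simple arithmetic: number of GPUs times training hours times price per GPU-hour. The following Python sketch assumes this simplified formula; the GPU count, duration and hourly price are hypothetical placeholders, not figures for any real model.

```python
def training_compute_cost(num_gpus: int, training_days: float, price_per_gpu_hour: float) -> float:
    """Estimate the compute cost of one training run in US dollars.

    Simplified assumption: cost = GPUs x training hours x price per GPU-hour.
    """
    training_hours = training_days * 24
    return num_gpus * training_hours * price_per_gpu_hour


# Hypothetical example: 10,000 GPUs running for 90 days at $2.50 per GPU-hour.
cost = training_compute_cost(num_gpus=10_000, training_days=90, price_per_gpu_hour=2.50)
print(f"Estimated compute cost: ${cost:,.0f}")
```

A calculation like this covers only the compute of a single training run; experiments, data preparation and personnel come on top.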

On the other hand, hardware resources are needed to operate the AI models: every answer a model generates (inference) requires computing power, and so does the vectorisation of the company’s information. Vectorisation converts texts into numerical vectors (embeddings) and is necessary to make the data ‘readable’ for AI models.
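What vectorisation means can be shown with a deliberately simple stand-in. Real systems use a trained embedding model (a neural network) for this step, which is where the computing power goes; the Python sketch below instead assumes a toy "hashing" approach that counts words into a fixed number of positions. It only illustrates the principle: any text goes in, a list of numbers of fixed length comes out.

```python
import hashlib
import math
import re

DIMENSIONS = 16  # toy value; real embedding models use hundreds or thousands of dimensions


def vectorise(text: str, dims: int = DIMENSIONS) -> list[float]:
    """Turn a text into a fixed-length vector of unit length (toy stand-in for an embedding model)."""
    vector = [0.0] * dims
    for word in re.findall(r"\w+", text.lower()):
        # Deterministically map each word to one position of the vector and count it there.
        index = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % dims
        vector[index] += 1.0
    # Normalise to length 1 so that long and short texts become comparable.
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm else vector


print(vectorise("Holiday policy for employees"))
```

Unlike this sketch, a real embedding model places texts with similar meaning (not just the same words) close to each other.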

AI models

AI models in themselves are merely a technology. In principle, there are different types of AI models, but as of 2024, the general public primarily understands AI to be applications relating to LLMs. This is why the focus of this article is exclusively on this type of AI.

There are two ways to obtain AI models: open-source models are available for free download and can be run on your own hardware, while closed-source models are operated by the manufacturer and accessed via API calls.
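To illustrate what "used via API calls" looks like in practice, the following Python sketch prepares a request to OpenAI’s Chat Completions endpoint, taken here as one example of a closed-source provider; other providers use their own endpoints and formats. The model name `gpt-4o`, the prompt and the placeholder key are assumptions for illustration. The request is only built, not sent, because sending it requires network access and a valid API key.

```python
import json
import os
import urllib.request
from typing import Optional

# Chat Completions endpoint of OpenAI's API (one example of a closed-source provider)
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "gpt-4o", api_key: Optional[str] = None) -> urllib.request.Request:
    """Prepare (but do not send) an HTTPS request asking a hosted model to answer a prompt."""
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {key}", "Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request("Summarise our travel expense policy.", api_key="YOUR_API_KEY")
print(request.get_method(), request.full_url)
# Sending it would need network access and a valid key:
# with urllib.request.urlopen(request) as response:
#     answer = json.load(response)["choices"][0]["message"]["content"]
```

The company pays per use and never touches the model itself; with an open-source model, the same question would instead be answered by software running on hardware the company provides.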

AI applications

AI models are merely a technology, and a technology without a use case does not solve any problems. To use AI models sensibly, a company needs specific challenges for which AI is the best solution approach. Enterprise search is one example of an application for which AI is the best technical solution: it helps employees to quickly and easily access internal knowledge within the company.
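The core idea behind such a search can be sketched in a few lines: the question and all documents are turned into vectors, and the documents whose vectors point in the most similar direction (cosine similarity) are returned. In this Python sketch, simple word counts stand in for the vectors an embedding model would produce, and the documents are hypothetical; a production enterprise search would use an embedding model and a vector database instead.

```python
import math
import re
from collections import Counter


def to_vector(text: str) -> Counter:
    """Toy vectorisation: count the words in a text (stand-in for an embedding model)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """1.0 = same direction, 0.0 = nothing in common."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, documents: dict[str, str], top_k: int = 2) -> list[str]:
    """Return the titles of the documents most similar to the query."""
    query_vector = to_vector(query)
    scored = [(cosine_similarity(query_vector, to_vector(text)), title) for title, text in documents.items()]
    scored.sort(reverse=True)
    return [title for score, title in scored[:top_k] if score > 0]


# Hypothetical internal documents
documents = {
    "Travel policy": "Employees book business travel through the travel portal.",
    "Holiday policy": "Employees request holiday leave in the HR system.",
    "IT security": "Passwords must be changed every 90 days.",
}
print(search("How do I request holiday leave?", documents))  # ['Holiday policy']
```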

Applications therefore form the interface between AI models and users and make the technology ‘usable’.

Integration of AI in the company

For many companies, integrating their own data into AI models is essential for improving their knowledge management. AI can be used in a company in various ways, for example as integrated AI solutions, AI assistants or AI agents. These are explained in more detail in this blog article.
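One common way of bringing company data to an AI model without retraining it is retrieval-augmented generation (RAG): relevant internal snippets are looked up first and then inserted into the prompt. The article does not prescribe a specific method, so the following Python sketch only illustrates that pattern under simplifying assumptions: retrieval uses plain word overlap instead of vector search, and the snippets and question are hypothetical.

```python
import re


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, snippets: list[str], top_k: int = 2) -> list[str]:
    """Pick the snippets sharing the most words with the question (toy retrieval step)."""
    q = words(question)
    ranked = sorted(snippets, key=lambda s: len(q & words(s)), reverse=True)
    return [s for s in ranked[:top_k] if q & words(s)]


def build_prompt(question: str, snippets: list[str]) -> str:
    """Insert the company's own information into the prompt sent to the AI model."""
    context = "\n".join(f"- {s}" for s in retrieve(question, snippets))
    return (
        "Answer the question using only the company information below.\n"
        f"Company information:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical internal knowledge
snippets = [
    "Holiday leave is requested in the HR system.",
    "The canteen opens at 11:30.",
    "Business trips are booked via the travel portal.",
]
print(build_prompt("How is holiday leave requested?", snippets))
```

The resulting prompt would then be sent to the model, for example via an API call; the model itself stays unchanged, and the company data only travels with each request.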

One question that comes up again and again is whether information should be hosted in the cloud or on premises. We have already covered this in a separate blog article, which also looks at the option of a private cloud.

Nevertheless, companies, especially SMEs, that are considering the use of AI should bear in mind that hosting AI models themselves requires an extremely large amount of hardware resources. A cloud solution makes these costs much easier to cover without jeopardising the business case.
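The reasoning behind this can be made concrete with a simple comparison: self-hosted hardware costs the same every month regardless of usage, whereas a cloud service is typically billed per use. The Python sketch below assumes straight-line depreciation and a flat price per request; all figures are hypothetical placeholders in one arbitrary currency, not real prices.

```python
def monthly_cost_on_premise(hardware_price: float, depreciation_months: int, monthly_operations: float) -> float:
    """Hardware spread evenly over its useful life, plus running costs (power, cooling, staff)."""
    return hardware_price / depreciation_months + monthly_operations


def monthly_cost_cloud(requests_per_month: int, price_per_request: float) -> float:
    """Pay-per-use: costs scale with actual usage."""
    return requests_per_month * price_per_request


def break_even_requests(on_premise_monthly: float, price_per_request: float) -> float:
    """Monthly usage above which self-hosting would become the cheaper option."""
    return on_premise_monthly / price_per_request


# Hypothetical figures for an SME, for illustration only
on_prem = monthly_cost_on_premise(hardware_price=300_000, depreciation_months=36, monthly_operations=5_000)
cloud = monthly_cost_cloud(requests_per_month=50_000, price_per_request=0.02)
print(f"On premise: {on_prem:,.0f} per month, cloud: {cloud:,.0f} per month")
print(f"Break-even: {break_even_requests(on_prem, 0.02):,.0f} requests per month")
```

With inputs like these, a company would need far more usage than it has before its own hardware paid off, which is the situation the cloud recommendation addresses.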

Visualisation of the generative AI stack

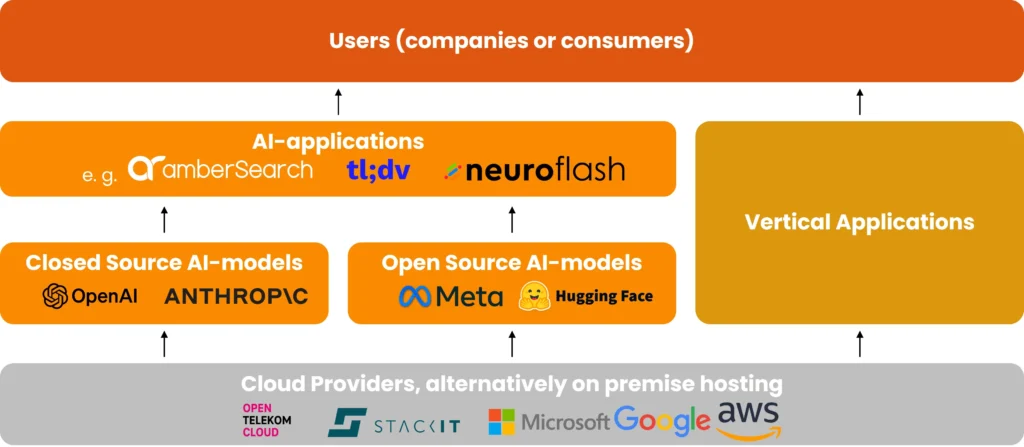

If you take the previous findings and visualise them, you would end up with a graphic similar to this one:

Overview of the generative AI value chain

One type of solution that we have not yet discussed is vertical applications. OpenAI, for example, offers a vertical application: on the one hand, the AI model in the background (e.g. GPT-4o) and, on the other, the application itself with ChatGPT. This enables OpenAI to cover several steps of the value chain directly.
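The layered structure of the stack, and what makes an offering "vertical", can be expressed as a small data structure: each offering is mapped to the layers it covers, and it counts as vertical if it spans more than one. The Python sketch below follows the OpenAI example from the text (model plus application); the second entry, "Hypothetical search tool", is invented purely for comparison.

```python
from enum import Enum


class Layer(Enum):
    HARDWARE = "Hardware resources"
    MODEL = "AI models"
    APPLICATION = "AI applications"


# Which layers of the stack each offering covers.
# The OpenAI entry follows the example in the text; the second entry is hypothetical.
offerings = {
    "ChatGPT with GPT-4o (OpenAI)": {Layer.MODEL, Layer.APPLICATION},
    "Hypothetical search tool": {Layer.APPLICATION},
}


def is_vertical(layers: set[Layer]) -> bool:
    """An offering is vertical if it covers more than one layer of the stack."""
    return len(layers) > 1


for name, layers in offerings.items():
    print(name, "-> vertical" if is_vertical(layers) else "-> single layer")
```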

Best practices for implementing a generative AI stack

Implementation as an on-premise or private cloud solution is not recommended, especially for medium-sized companies. They often lack the knowledge to support and maintain these sophisticated resources. In times of demographic change, it is not only the shortage of skilled labour that speaks in favour of using a cloud solution, but also the business case.

What the actual implementation looks like depends heavily on the use case and the chosen solution. Nevertheless, we have created an overview of the basics of AI integration in this blog article. Interested parties can also take a look at our checklist for AI implementation and the technical basics of AI implementation.

Conclusion on the Generative AI stack

The generative AI stack in the company is significantly more resource-intensive than conventional software solutions. The introduction and operation of generative AI requires a well thought-out infrastructure that includes both hardware resources and specialised software. Companies, especially SMEs, should be aware of the significant investment required to train and operate AI models.

Opting for cloud solutions can be the appropriate choice in many cases, as they not only optimise hardware and maintenance costs, but also provide access to sophisticated technology without the need for in-depth in-house expertise.