The EU’s AI Act (also known as the AI Regulation) entered into force in August 2024. Further phases take effect step by step, including, for example, the obligation to provide AI training. This blog article explains the AI Act, gives an overview of its phases and suggests how companies can go about implementing the requirements of the regulation.

The AI Act

The European Union’s AI Act (Artificial Intelligence Act) is a law that aims to regulate the development and use of artificial intelligence (AI) in the EU. The proposal was first presented by the European Commission in April 2021 and came into force on August 1, 2024. The aim of the AI Act is to strike a balance between promoting innovation and ensuring the safety and fundamental rights of citizens with regard to AI.

Initially, the entry into force of the AI Act had no direct impact on companies and the use of AI. Nevertheless, the AI Act provides for a multi-stage implementation plan (Art. 113 AI-Act), which does have an impact on companies.

Phase 1 of the AI Act | February 2, 2025: AI training & solutions with unacceptable risks

The first phase of the AI Act was reached on February 2, 2025 in accordance with Art. 113 of the AI-Act. Since this date, the following requirements have applied:

- AI systems with unacceptable risks will be prohibited (Art. 5 AI-Act)

The AI Act divides AI solutions into different risk classes. In this first phase, the AI solutions with the greatest (= unacceptable) risk are banned. These include, for example, systems with the following functions:

- Exploitation of weaknesses of particularly vulnerable people

- Evaluation of social behavior (social scoring)

- Cognitive behavior manipulation

- Biometric real-time remote identification (with a few exceptions)

- Biometric categorization based on sensitive characteristics

- Development of AI competence becomes mandatory (Art. 4 AI-Act)

To ensure that AI is used responsibly and within the right framework, providers and operators of AI systems must develop internal AI competencies.

- This applies to all organizations that use AI, regardless of the level of risk posed by AI.

- Employees and other persons who operate or use AI systems on the organization's behalf must have sufficient AI skills.

- Training and education must be provided where necessary.

Note: The second requirement in particular affects practically every company. Companies that use solutions such as Microsoft Copilot, amberSearch or ChatGPT on a daily basis are also covered and must be able to provide evidence of employee training.

Phase 2 of the AI Act | August 2, 2025: High-risk systems, general-purpose AI models

In Phase 2, Chapter III Section 4, Chapter V, Chapter VII and Chapter XII as well as Article 78 apply from August 2, 2025, with the exception of Article 101. This results in further, extended requirements for operators of high-risk AI systems and developers of general-purpose models:

- High-risk AI systems (Chapter III AI-Act)

As described in this article, the AI Act divides AI systems into different risk levels. AI systems with unacceptable risks were already banned in Phase 1. To review the mechanisms introduced, Chapter III also establishes various control bodies that member states must maintain from this date to monitor implementation. The following apply to high-risk AI systems:

- Risk assessment and quality management: Companies must ensure that their high-risk AI systems comply with the regulations in terms of transparency, traceability and safety.

- Conformity assessment: A conformity assessment must be carried out before the market launch in order to check compliance with the requirements.

- Documentation requirements: Developers and providers must provide comprehensive technical documentation describing, among other things, how the system works, safety measures and potential risks.

- AI models with a general purpose (Chapter V AI-Act)

In principle, the requirements for AI model providers within the EU are increasing, particularly with regard to training data, use, and testing or approval of the systems. In concrete terms, this means:

- Companies that develop AI models without open source code must disclose how the AI was trained and which tests were carried out during its development.

- In addition, providers of such AI models must name a contact person to the EU who will be the point of contact for queries of various kinds.

- Providers of general-purpose AI models are obliged to run certain standard protocols and tests to evaluate a model before publishing it.

Phase 3 of the AI Act | August 2, 2026: the majority of the AI Act comes into force

The majority of the AI Act comes into force on this date.

- Transparency and labeling obligation (Art. 50 AI-Act)

The transparency and labeling obligations for AI systems intended for direct interaction with humans come into force. This applies in particular to generated audio, image, video or text content.

Phase 4 of the AI Act | August 2, 2027: Full validity of the AI Act & clarification of the classification of high-risk systems

- Classification rules for high-risk AI systems (Art. 6 AI-Act)

Article 6 of the AI Regulation defines specific requirements and conditions for high-risk AI systems. This classification is independent of whether the AI system is operated in combination with a product or independently. The following main criteria apply:

- Safety component or stand-alone product: an AI system is classified as high-risk if it is used as a safety component of a product listed in Annex I or is itself such a product.

- Conformity assessment: High-risk AI systems must undergo third-party verification before they can be placed on the market or put into operation.

- The AI Act will become fully applicable on August 2, 2027

On this date, the AI Act will become fully mandatory for all companies within the EU. This also includes, for example, that GPAI (General Purpose AI) models that were launched on the market before August 2, 2025, must be AI Act-compliant.
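The four phases described above can be kept as a small lookup table, for example in an internal compliance script. The following is a minimal sketch, not an official tool: the dates and short summaries are taken from the phase headings of this article, while the names `Phase`, `PHASES`, `phases_in_effect` and `next_phase` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Phase:
    number: int
    applies_from: date
    summary: str


# Dates and summaries follow the phase headings of this article.
PHASES = (
    Phase(1, date(2025, 2, 2), "Prohibited AI systems (Art. 5) and AI competence (Art. 4)"),
    Phase(2, date(2025, 8, 2), "High-risk control bodies and general-purpose AI models"),
    Phase(3, date(2026, 8, 2), "Majority of the AI Act, incl. transparency and labeling (Art. 50)"),
    Phase(4, date(2027, 8, 2), "Full applicability, incl. classification rules (Art. 6)"),
)


def phases_in_effect(on: date) -> List[Phase]:
    """Return every phase whose start date is on or before the given day."""
    return [p for p in PHASES if p.applies_from <= on]


def next_phase(on: date) -> Optional[Phase]:
    """Return the earliest phase that has not started yet, or None if all apply."""
    upcoming = [p for p in PHASES if p.applies_from > on]
    return min(upcoming, key=lambda p: p.applies_from) if upcoming else None


if __name__ == "__main__":
    today = date.today()
    for phase in phases_in_effect(today):
        print(f"Phase {phase.number} applies since {phase.applies_from}: {phase.summary}")
    upcoming = next_phase(today)
    if upcoming is not None:
        print(f"Next: Phase {upcoming.number} on {upcoming.applies_from}")
```

A table like this only helps with planning; which obligations actually apply to a specific company still has to be assessed individually.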

Classification of risk categories according to the AI Act

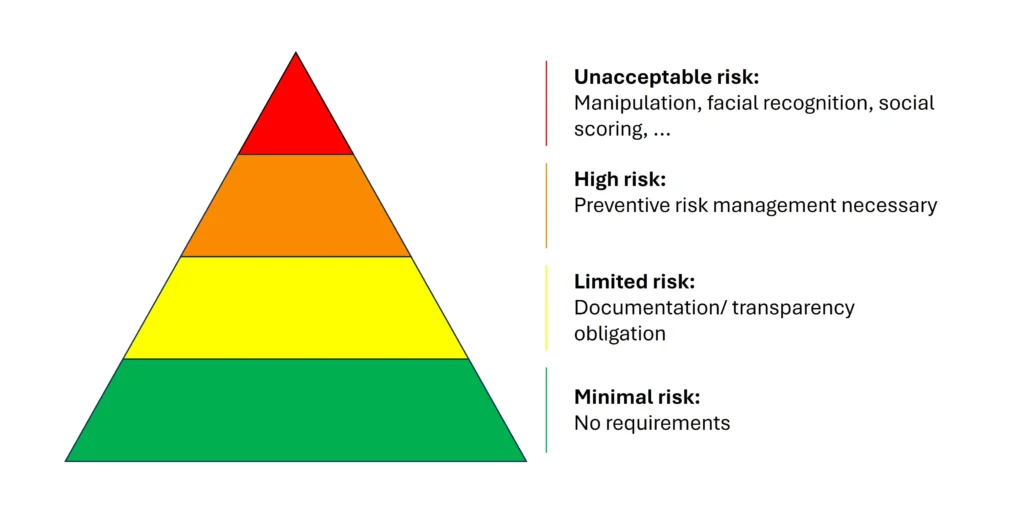

The AI Act divides AI systems into different categories based on their risk to health, safety and fundamental rights. Systems with an unacceptable risk are prohibited, while high-risk systems are subject to strict requirements such as risk management and conformity assessments. Systems with limited risk are subject to less stringent requirements.

Risk classes of the AI Act

The following solutions can be seen as examples per risk category:

- Unacceptable risk: Social scoring by state authorities, manipulation systems to control behavior, uncontrolled biometric surveillance such as facial recognition in public spaces.

- High risk: AI in healthcare (diagnostic tools), AI-supported screening systems for job applications or education, or AI in critical infrastructure (e.g. energy management).

- Limited risk: Chatbots or AI-supported recommendation systems on online platforms.

- Minimal risk: AI-supported spell checking, music recommendations or personalized advertising without sensitive data reference.
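For a first internal inventory, the examples above can be written down as a simple mapping from use case to risk category. The sketch below is illustrative only: the use cases and consequences are taken from this article, the names `RiskCategory`, `EXAMPLE_USE_CASES` and `consequence_for` are hypothetical, and a real classification requires a legal assessment of the individual system rather than a keyword lookup.

```python
from enum import Enum


class RiskCategory(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Consequences as summarized in this article (simplified).
CONSEQUENCE = {
    RiskCategory.UNACCEPTABLE: "prohibited",
    RiskCategory.HIGH: "strict requirements such as risk management and conformity assessment",
    RiskCategory.LIMITED: "less stringent requirements",
    RiskCategory.MINIMAL: "no specific requirements described in this article",
}

# Example use cases from the list above; keys are an assumption for this sketch.
EXAMPLE_USE_CASES = {
    "social scoring": RiskCategory.UNACCEPTABLE,
    "diagnostic tool": RiskCategory.HIGH,
    "job application screening": RiskCategory.HIGH,
    "chatbot": RiskCategory.LIMITED,
    "spell checking": RiskCategory.MINIMAL,
    "music recommendations": RiskCategory.MINIMAL,
}


def consequence_for(use_case: str) -> str:
    """Look up an example use case; unknown entries are flagged for review."""
    category = EXAMPLE_USE_CASES.get(use_case.strip().lower())
    if category is None:
        return "unknown: needs individual legal assessment"
    return f"{category.value} risk: {CONSEQUENCE[category]}"


if __name__ == "__main__":
    for name in ("Chatbot", "Social scoring", "Inventory forecasting"):
        print(f"{name} -> {consequence_for(name)}")
```

Anything that is not in the table is deliberately reported as unknown instead of being assigned a default category.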

Compliance with the AI Act – what companies need to do now

Since February 2, 2025, companies have been obliged under the EU AI Regulation to ensure that their employees are trained in the use of artificial intelligence (AI). This is currently the most significant consequence for companies that use AI, as failure to comply may result in fines.

Why is AI training important?

The introduction of the AI Regulation aims to ensure the responsible use of AI systems. Companies must ensure that their employees have sufficient knowledge of how AI works, how it can be used and the risks involved. This not only promotes compliance, but also strengthens trust in AI-supported processes.

Contents of effective AI training for companies

Comprehensive AI training should cover the following topics:

- Basics of artificial intelligence

  - Introduction to the functioning of AI systems

  - Overview of different AI models and their training methods

  - Possible applications of AI in different business areas

  - Opportunities and risks of AI systems

- Legal and ethical aspects

  - Detailed explanation of the EU AI Regulation, including risk classification and impact on everyday working life

  - Legal obligations and compliance requirements for companies

  - Discussion of ethical issues in dealing with AI

  - Data protection, copyright and liability issues in the AI context

- Practical application

  - Teaching the basics of using generative AI

  - Practical exercises with common AI tools and platforms

  - Training in effective “prompting” for the use of AI systems

The training should end with a test and a certificate so that participation can be proven later.
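To be able to show evidence of training on request, it can help to keep a simple record per employee. The following is a minimal sketch under stated assumptions: the AI Act does not prescribe this structure, and the class `TrainingRecord`, its fields and the function `missing_evidence` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class TrainingRecord:
    employee: str
    completed_on: Optional[date] = None   # day the training was finished
    test_passed: bool = False             # result of the final test
    certificate_id: Optional[str] = None  # reference to the issued certificate

    def is_evidenced(self) -> bool:
        """True only if completion, a passed test and a certificate are all on file."""
        return (
            self.completed_on is not None
            and self.test_passed
            and bool(self.certificate_id)
        )


def missing_evidence(records: List[TrainingRecord]) -> List[str]:
    """Return the employees whose training cannot yet be proven."""
    return [r.employee for r in records if not r.is_evidenced()]


if __name__ == "__main__":
    # Invented sample data for demonstration purposes.
    records = [
        TrainingRecord("A. Example", date(2025, 1, 20), True, "CERT-001"),
        TrainingRecord("B. Example", date(2025, 1, 22), False),
        TrainingRecord("C. Example"),
    ]
    print(missing_evidence(records))
```

In practice this information usually lives in an HR or learning management system; the point of the sketch is only that completion date, test result and certificate are stored together.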

amberSearch & the AI Act

In 2020, amberSearch began developing an AI-based, in-house search engine that helps employees to quickly access relevant internal expertise. Rapidly growing amounts of data, an increasing number of data pools and the loss of knowledge carriers due to demographic change make it increasingly difficult to work efficiently with information within a company. As a result, a lot of time goes into searching and reworking, and work stays superficial rather than in-depth because time is lost on irrelevant “search tasks”. amberSearch gives employees a simple solution to this problem.

We have been working with (partly self-developed) large language models since 2020 and took the GDPR-compliant and responsible use to heart long before the AI Act came into force. Our solution is now used by over 200 customers, where we have regularly provided AI training and courses. We have also made a lot of knowledge available free of charge on our blog in order to increase knowledge about AI in society. We would also like to share this knowledge with other companies. We have therefore developed a training course based on our knowledge, which covers all the important content in accordance with the AI Regulation. Feel free to contact us.
